What Does “Real-Time” Data Look Like? A Guide for CTOs and Architects

May 27, 2025 | DiffusionData

In the evolving landscape of modern data-driven applications, the demand for real-time data has never been higher. From enabling instant customer insights to powering automated decision-making systems, real-time data is a cornerstone for competitive advantage. But what exactly does “real-time” mean from an architectural standpoint? And how can CTOs and system architects design infrastructures that truly deliver it?

This article dives deep into the technical realities of real-time data, exploring pitfalls of outdated approaches like polling, and highlighting essential capabilities such as scalable data delivery, smart filtering, millisecond latency, and delta streaming.

The Problem with Outdated Polling

Many legacy systems rely on polling to simulate real-time data. Polling involves repeatedly querying a data source at fixed intervals (e.g., every few seconds) to check for updates. While simple, polling is inefficient and introduces significant latency. Key drawbacks include:

- Increased Network Load: Frequent requests lead to wasted bandwidth, especially if data hasn’t changed

- Latency: A change becomes visible only at the next poll, so updates can arrive up to one full polling interval late

- Scalability Issues: As user count grows, polling overhead multiplies, leading to performance bottlenecks

For true real-time data, polling is fundamentally outdated. CTOs and architects should move beyond this model and have the server push updates to clients as changes occur.
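To make the polling overhead concrete, here is a back-of-the-envelope sketch in Python. The function name, the workload figures, and the simplifying assumptions (evenly spaced changes, changes landing uniformly at random between polls) are illustrative and not taken from any measured system.

```python
def polling_cost(clients: int, interval_s: float, changes_per_s: float) -> dict:
    """Rough cost model for fixed-interval polling (illustrative only)."""
    requests_per_s = clients / interval_s
    # A poll is "useful" only if the data changed since the previous poll, so
    # useful polls per client are capped by both the poll rate and the change rate.
    useful_per_s = clients * min(1 / interval_s, changes_per_s)
    return {
        "requests_per_s": requests_per_s,
        "wasted_fraction": 1 - useful_per_s / requests_per_s,
        # Assumes a change is equally likely to land anywhere between two polls.
        "avg_delay_s": interval_s / 2,
        "worst_delay_s": interval_s,
    }


# Hypothetical workload: 10,000 clients polling every 2 s,
# with the underlying data changing once every 10 s.
cost = polling_cost(clients=10_000, interval_s=2.0, changes_per_s=0.1)
print(cost)
```

Under these assumptions the server handles 5,000 requests per second, roughly 80% of which return nothing new, and clients still see each change about a second late on average. Shortening the interval reduces the delay but increases the request load in proportion.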

Scalable Data Delivery: Beyond the Basics

Real-time data delivery requires scalability to handle unpredictable volumes and velocity of incoming events.

Event-Driven Architectures

Adopting event-driven architectures is crucial. Instead of clients polling for changes, producers publish each change as an event through message queues or streaming platforms (e.g., Apache Kafka, Amazon Kinesis). This approach:

- Minimises unnecessary data transfers

- Supports horizontal scaling

- Enables multiple consumers to subscribe independently

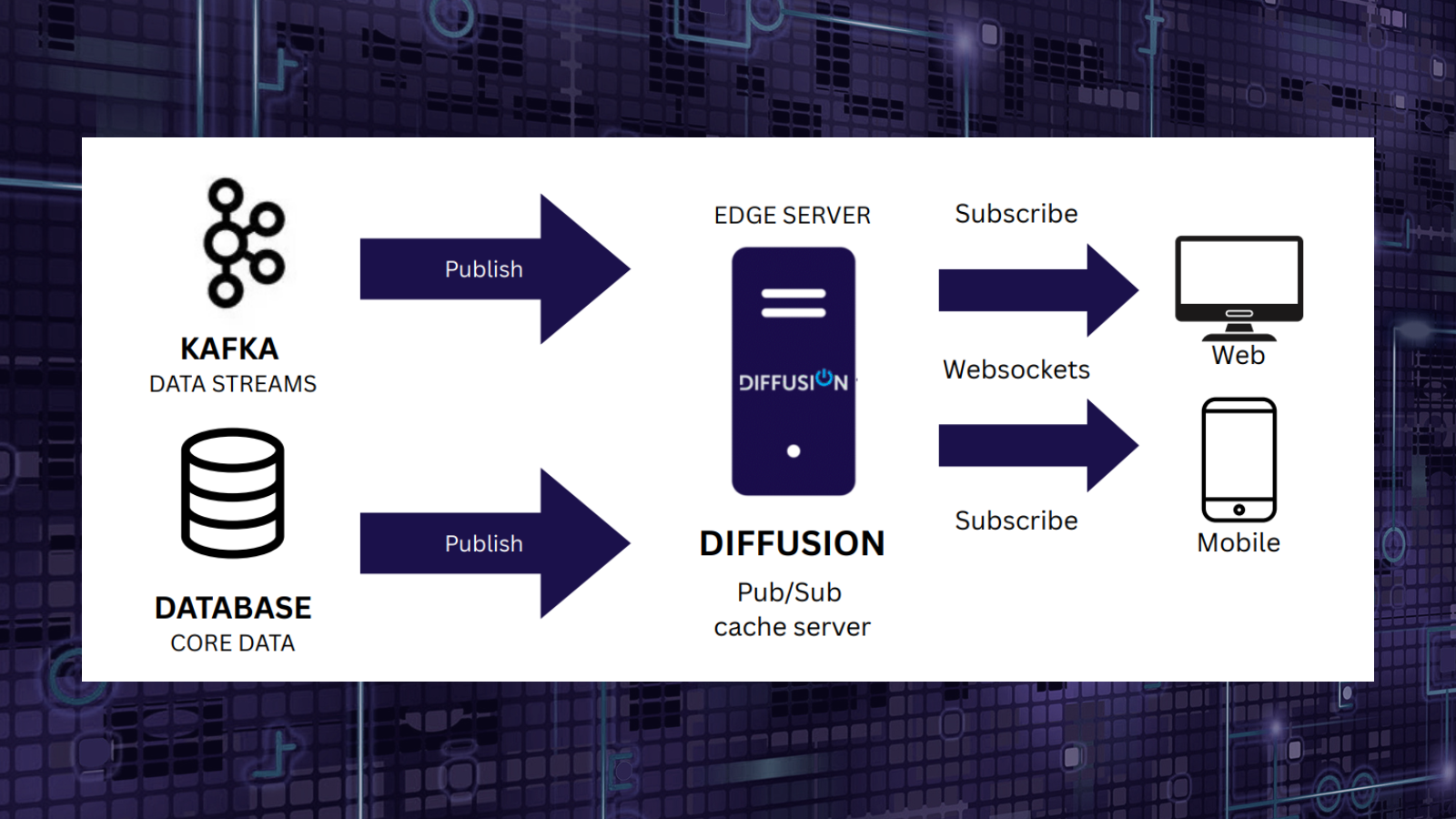

Publish-Subscribe Models

Using publish-subscribe (pub/sub) models allows selective data dissemination where only interested parties receive relevant updates. This enhances scalability and resource utilisation.
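The pub/sub idea can be sketched in a few lines of Python. This is a minimal in-process illustration, not the API of Kafka, Kinesis, or any other product; the `Broker` class and the topic names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable


class Broker:
    """Toy in-process broker: routes each message to that topic's subscribers only."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> int:
        """Push the message to every subscriber of the topic; return how many received it."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


broker = Broker()
prices, alerts = [], []
broker.subscribe("prices/EURUSD", prices.append)  # hypothetical topic names
broker.subscribe("alerts", alerts.append)

delivered = broker.publish("prices/EURUSD", {"bid": 1.0841})
print(delivered, prices, alerts)
```

The publisher never needs to know who is listening, and the `alerts` subscriber does no work for a price update. A production broker adds networking, persistence, and back-pressure on top of the same routing idea.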

Smart Filtering: Delivering What Matters

Not every data change is relevant to every consumer. Smart filtering ensures that clients receive only meaningful updates, reducing noise and bandwidth. Filtering can happen at multiple stages:

- Server-Side Filtering: Based on user preferences, roles, or subscriptions

- Delta Filtering: Sending only changes rather than full data snapshots

- Context-Aware Filtering: Leveraging metadata to prioritise critical updates

Implementing smart filtering reduces system load and accelerates data consumption.
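As a rough illustration of server-side and context-aware filtering, the sketch below decides which clients should receive an update before anything is sent. The `Update` and `Subscription` types, the priority scale, and the client names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Update:
    topic: str
    priority: int  # hypothetical scale: higher means more urgent
    payload: dict


@dataclass(frozen=True)
class Subscription:
    client_id: str
    topics: frozenset
    min_priority: int = 0  # context-aware threshold chosen by the client


def route(update: Update, subscriptions: list) -> list:
    """Return the IDs of clients whose subscription matches this update."""
    return [
        s.client_id
        for s in subscriptions
        if update.topic in s.topics and update.priority >= s.min_priority
    ]


subs = [
    Subscription("trader-1", frozenset({"prices/EURUSD"})),
    Subscription("risk-desk", frozenset({"prices/EURUSD", "alerts"}), min_priority=5),
    Subscription("ops", frozenset({"alerts"})),
]

routine_tick = Update("prices/EURUSD", priority=1, payload={"bid": 1.0841})
urgent_alert = Update("alerts", priority=7, payload={"msg": "feed degraded"})
print(route(routine_tick, subs))
print(route(urgent_alert, subs))
```

Because the decision is made on the server, a client that is not interested in an update costs no bandwidth at all for it.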

Millisecond Latency: The Gold Standard

For many real-time systems, especially in financial trading, IoT, and online gaming, latency measured in milliseconds is the target. Achieving this requires:

- Optimised Network Paths: Using edge computing, CDNs, and low-latency protocols (e.g., WebSockets, gRPC)

- Efficient Serialisation: Compact data formats like Protocol Buffers or Avro to reduce transmission time

- High-Performance Processing: In-memory data stores, parallel processing, and hardware acceleration

Architects must design pipelines end-to-end with latency in mind, measuring and optimising every hop.
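The effect of serialisation on payload size can be seen with a small comparison. The sketch below uses Python's standard `struct` module as a stand-in for a schema-based binary format; it is not Protocol Buffers or Avro, which add schemas, versioning, and variable-length encoding. The tick fields and values are made up for illustration.

```python
import json
import struct

# Hypothetical price tick; field names and values are illustrative.
tick = {"instrument_id": 42, "bid": 1.0841, "ask": 1.0843, "ts_ms": 1748342400000}

# Text encoding: self-describing, but field names travel with every message.
as_json = json.dumps(tick).encode("utf-8")

# Fixed binary layout: little-endian uint32, two float64 values, uint64.
# With "<" there is no padding, so the size is 4 + 8 + 8 + 8 = 28 bytes.
TICK_FORMAT = struct.Struct("<IddQ")
as_binary = TICK_FORMAT.pack(
    tick["instrument_id"], tick["bid"], tick["ask"], tick["ts_ms"]
)

# The receiver must know the layout in advance to decode it.
decoded = TICK_FORMAT.unpack(as_binary)

print(len(as_json), len(as_binary))
print(decoded)
```

The binary form is well under half the size of the JSON form here, at the cost of both sides having to agree on the layout. Schema-based formats make that agreement explicit and evolvable.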

Delta Streaming: Sending Only What’s Changed

A core principle in real-time data delivery is delta streaming: transmitting only the differences (deltas) between data states rather than entire datasets. Benefits include:

- Reduced Bandwidth: Smaller payloads mean faster delivery

- Faster Processing: Clients apply incremental updates rather than reprocessing everything

- Lower Storage and Compute: Less data to store or re-transmit

Delta streaming requires systems capable of tracking data changes at a granular level and efficiently encoding them.
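A minimal sketch of the idea, assuming state is a flat dictionary with string keys: the server computes what changed between two snapshots, and the client applies that change to its local copy. This is an illustration of the principle only, not the delta encoding used by Diffusion or any other product, and the function names are hypothetical.

```python
def compute_delta(old: dict, new: dict) -> dict:
    """Describe how to turn `old` into `new`: keys to set and keys to remove."""
    changed = {k: v for k, v in new.items() if k not in old or old[k] != v}
    removed = sorted(k for k in old if k not in new)
    return {"set": changed, "remove": removed}


def apply_delta(state: dict, delta: dict) -> dict:
    """Return a new state with the delta applied; the input is left untouched."""
    result = {k: v for k, v in state.items() if k not in delta["remove"]}
    result.update(delta["set"])
    return result


# Hypothetical snapshots of one instrument's state.
old_state = {"bid": 1.0841, "ask": 1.0843, "status": "open"}
new_state = {"bid": 1.0842, "ask": 1.0843, "volume": 500}

delta = compute_delta(old_state, new_state)
print(delta)
print(apply_delta(old_state, delta) == new_state)
```

Only the changed `bid`, the new `volume`, and the removal of `status` cross the wire; the unchanged `ask` is never resent. Real systems also need an initial full snapshot and a way to recover if a client misses a delta, for example by sequence-numbering updates.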

Putting It All Together: What Real-Time Data Looks Like Today

- Data is pushed to clients via event-driven, pub/sub platforms rather than pulled

- Systems employ smart filtering to deliver precise, relevant updates

- The delivery pipeline supports millisecond-level latency with optimised networks and processing

- Data updates are transmitted as deltas, minimising payload size and improving responsiveness

- The entire infrastructure is scalable, able to handle spikes and growth without degradation

Conclusion

For CTOs and architects, understanding what “real-time” data truly entails is crucial for building future-proof systems. Moving away from outdated polling models and embracing scalable, filtered, low-latency, and delta-based streaming is the path forward.

By designing with these principles in mind, organisations can deliver on the promise of real-time data, enabling faster insights, smarter automation, and ultimately, a stronger competitive edge.
